There was a time when I wrote documentation in SGML quite a lot. Usually I processed these input files with docbook2... to generate html, pdf, txt, etc.

Today I wanted to build an old project of mine that I hadn't looked at in about 4-5 years, including its documentation.

When docbook2... ran, however, it generated lots of:

jade:/etc/sgml/catalog:1:8:E: cannot open "/usr/share/sgml/CATALOG.xmlcharent" (No such file or directory)

On openSUSE, apparently, the xmlcharent stuff is provided by the package xmlcharent, but the sgml configuration file, /etc/sgml/catalog, is provided by the package sgml-skel.

The xmlcharent package went through some updates recently, and the /usr/share/sgml/CATALOG.xmlcharent file has been replaced by /usr/share/xmlcharent/xmlcharent.sgml. Therefore one needs to update /etc/sgml/catalog manually and change the first line from /usr/share/sgml/CATALOG.xmlcharent to /usr/share/xmlcharent/xmlcharent.sgml.
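As a rough sketch of that edit, the snippet below performs the substitution on a scratch copy rather than on the real /etc/sgml/catalog. The contents of the scratch file (a single CATALOG line) are my assumption about what the first line looks like; on a real system you would back up /etc/sgml/catalog and apply the same sed expression to it as root.

```shell
# Scratch copy standing in for /etc/sgml/catalog (assumed first-line format).
catalog=$(mktemp)
printf 'CATALOG "/usr/share/sgml/CATALOG.xmlcharent"\n' > "$catalog"

# Swap the stale path for the new location of the xmlcharent catalog.
sed 's|/usr/share/sgml/CATALOG.xmlcharent|/usr/share/xmlcharent/xmlcharent.sgml|' \
    "$catalog" > "$catalog.new" && mv "$catalog.new" "$catalog"

# Show the result.
cat "$catalog"
```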

23 Dec 2015

30 Sept 2015

OE Build of glmark2 Running on Cubietruck with Mali

Here are the steps you can perform to use OpenEmbedded to build an image for the Cubietruck in order to run the glmark2-es2 benchmark accelerated via the binary-only mali user-space driver.

First off, grab the scripts that will guide you through your build:

$ git clone git://github.com/twoerner/oe-layerindex-config.git

Cloning into 'oe-layerindex-config'...

remote: Counting objects: 45, done.

remote: Total 45 (delta 0), reused 0 (delta 0), pack-reused 45

Receiving objects: 100% (45/45), 11.04 KiB | 0 bytes/s, done.

Resolving deltas: 100% (24/24), done.

Checking connectivity... done.

Kick off the build configuration by sourcing the main script

$ . oe-layerindex-config/oesetup.sh

This script will use wget to grab some items from the OpenEmbedded layer index, then ask you which branch you would like to use:

Choose "master".

Then you'll be asked which board you'd like to build for:

Scroll down and choose "cubietruck". At this point the scripts will download meta-sunxi for you and configure your build to use this BSP layer by adding it to your bblayers.conf.

Now you need to choose your distribution:

Select "meta-yocto". Now this layer will be fetched and added to your build. Since meta-yocto includes a number of poky variants we will be asked to choose one:

Select "poky".

We now need to tell our build where it can place its downloads (notice the question at the bottom of the following screen):

You can enter your download directory. This entry box uses readline which, among other things, allows you to use tab-completion when entering your download path. I would recommend using a fully-qualified path for this information. It is common to use one location for all the downloads of all builds taking place on a given machine.

Now your configuration step is mostly finished:

If you simply wanted to perform a small core-image-minimal build, you could go ahead and bitbake that up now. But we want to add glmark2 to our image, and its metadata isn't found in the layers we currently have. Therefore we need to add one more layer:

Before we can build, we need to tweak our configuration to let bitbake know which kernel we want to use, which u-boot we want to use, what packages we want to add to our image, and so forth. Edit conf/local.conf and make yours look similar to:

Now we are ready to build!

$ bitbake core-image-full-cmdline

When our build completes there are some warnings that are issued, we can safely ignore these for now:

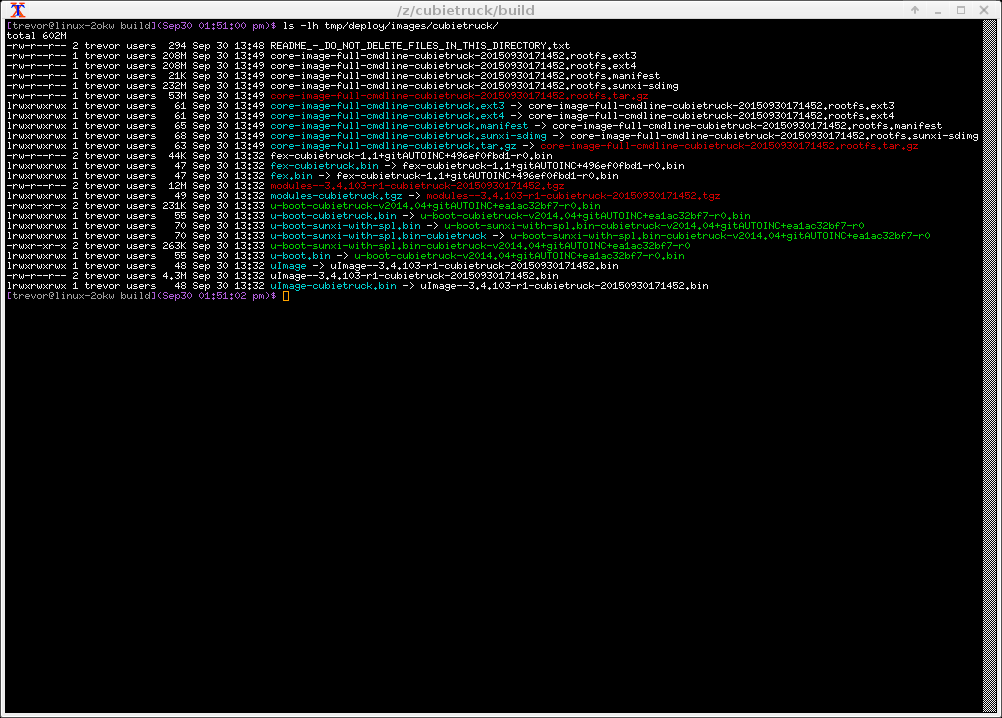

We will find our artifacts in:

Simply dd the sdimg to a microSD card:

# dd if=core-image-full-cmdline-cubietruck.sunxi-sdimg of=/dev/<your microSD device> bs=1M

Note that on some distributions you have to be the root user to perform the above step and that you need to figure out what jumble of letters to fill in for the <your microSD device> part.

Pop the microSD into the cubietruck's microSD slot, attach an HDMI monitor, a 3.3V console cable, and apply power.

When the device finishes booting you can now run the glmark2-es2 demo. Unfortunately it only works in full-screen mode. If you want to see which tests it is currently running and the current FPS count while the tests are running you can specify the --annotate option.

There are two common ways to do this:

There is also a "sunximali-test" demo app you can try.

Build Configuration:

BB_VERSION = "1.27.1"

BUILD_SYS = "x86_64-linux"

NATIVELSBSTRING = "openSUSE-project-13.2"

TARGET_SYS = "arm-poky-linux-gnueabi"

MACHINE = "cubietruck"

DISTRO = "poky"

DISTRO_VERSION = "1.8+snapshot-20150930"

TUNE_FEATURES = "arm armv7a vfp neon callconvention-hard vfpv4 cortexa7"

TARGET_FPU = "vfp-vfpv4-neon"

meta-sunxi = "master:14da837096f2c4bf1471b9cce5cf7fd30f55999b"

meta = "master:4a1dec5c61f73e7cfa430271ed395094bb262f6b"

meta-yocto = "master:613c38fb9b5f20a89ca88f6836a21b9c7604e13e"

meta-oe = "master:f4533380c8a5c1d229f692222ee0c2ef9d187ef8"

The conf/local.conf is:

PREFERRED_PROVIDER_virtual/kernel = "linux-sunxi"

PREFERRED_PROVIDER_u-boot = "u-boot-sunxi"

PREFERRED_PROVIDER_virtual/bootloader = "u-boot-sunxi"

DEFAULTTUNE = "cortexa7hf-neon-vfpv4"

CORE_IMAGE_EXTRA_INSTALL = "packagegroup-core-x11-base sunxi-mali-test glmark2"

MACHINE_EXTRA_RRECOMMENDS = " kernel-modules"

IMAGE_FSTYPES_remove = "tar.bz2"

DISTRO_FEATURES_append = " x11"

PACKAGECONFIG_pn-glmark2 = "x11-gles2"

PREFERRED_PROVIDER_jpeg = "jpeg"

PREFERRED_PROVIDER_jpeg-native = "jpeg-native"

PACKAGE_CLASSES ?= "package_ipk"

EXTRA_IMAGE_FEATURES = "debug-tweaks"

USER_CLASSES ?= "buildstats image-mklibs image-prelink"

PATCHRESOLVE = "noop"

OE_TERMINAL = "auto"

BB_DISKMON_DIRS = "\

STOPTASKS,${TMPDIR},1G,100K \

STOPTASKS,${DL_DIR},1G,100K \

STOPTASKS,${SSTATE_DIR},1G,100K \

ABORT,${TMPDIR},100M,1K \

ABORT,${DL_DIR},100M,1K \

ABORT,${SSTATE_DIR},100M,1K"

PACKAGECONFIG_append_pn-qemu-native = " sdl"

PACKAGECONFIG_append_pn-nativesdk-qemu = " sdl"

ASSUME_PROVIDED += "libsdl-native"

CONF_VERSION = "1"

First off, grab the scripts that will guide you through your build:

$ git clone git://github.com/twoerner/oe-layerindex-config.git

Cloning into 'oe-layerindex-config'...

remote: Counting objects: 45, done.

remote: Total 45 (delta 0), reused 0 (delta 0), pack-reused 45

Receiving objects: 100% (45/45), 11.04 KiB | 0 bytes/s, done.

Resolving deltas: 100% (24/24), done.

Checking connectivity... done.

Kick off the build configuration by sourcing the main script:

$ . oe-layerindex-config/oesetup.sh

This script will use wget to grab some items from the OpenEmbedded layer index, then ask you which branch you would like to use:

Choose "master".

Then you'll be asked which board you'd like to build for:

Scroll down and choose "cubietruck". At this point the scripts will download meta-sunxi for you and configure your build to use this BSP layer by adding it to your bblayers.conf.

Now you need to choose your distribution:

Select "meta-yocto". Now this layer will be fetched and added to your build. Since meta-yocto includes a number of poky variants we will be asked to choose one:

Select "poky".

We now need to tell our build where it can place its downloads (notice the question at the bottom of the following screen):

You can enter your download directory. This entry box uses readline which, among other things, allows you to use tab-completion when entering your download path. I would recommend using a fully-qualified path for this information. It is common to use one location for all the downloads of all builds taking place on a given machine.

Now your configuration step is mostly finished:

If you simply wanted to perform a small core-image-minimal build, you could go ahead and bitbake that up now. But we want to add glmark2 to our image, and its metadata isn't found in the layers we currently have. Therefore we need to add one more layer:

Before we can build, we need to tweak our configuration to let bitbake know which kernel we want to use, which u-boot we want to use, what packages we want to add to our image, and so forth. Edit conf/local.conf and make yours look similar to:

Now we are ready to build!

$ bitbake core-image-full-cmdline

When our build completes, some warnings are issued; we can safely ignore these for now:

We will find our artifacts in:

Simply dd the sdimg to a microSD card:

# dd if=core-image-full-cmdline-cubietruck.sunxi-sdimg of=/dev/<your microSD device> bs=1M

Note that on some distributions you have to be the root user to perform the above step and that you need to figure out what jumble of letters to fill in for the <your microSD device> part.
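If you're nervous about pointing dd at the wrong disk, a small wrapper can at least refuse obviously wrong targets. This is only a sketch: the function name write_sdimg is made up for illustration, and it still relies on you picking the right device yourself (comparing the output of lsblk before and after inserting the card is one common way).

```shell
# Hypothetical helper: only run dd when the image exists and the
# target really is a block device.
write_sdimg() {
    img=$1
    dev=$2
    [ -f "$img" ] || { echo "no such image: $img"; return 1; }
    [ -b "$dev" ] || { echo "not a block device: $dev"; return 1; }
    # Same dd invocation as above, followed by a sync to flush buffers.
    dd if="$img" of="$dev" bs=1M && sync
}

# To help spot the card, list whole disks before and after inserting it:
#   lsblk -d -o NAME,SIZE,MODEL,TRAN
# Then, as root (the device name is yours to fill in):
#   write_sdimg core-image-full-cmdline-cubietruck.sunxi-sdimg /dev/<your microSD device>
```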

Pop the microSD into the cubietruck's microSD slot, attach an HDMI monitor, a 3.3V console cable, and apply power.

When the device finishes booting you can now run the glmark2-es2 demo. Unfortunately it only works in full-screen mode. If you want to see which tests it is currently running and the current FPS count while the tests are running you can specify the --annotate option.

There are two common ways to do this:

- plug a USB keyboard into the cubietruck and type the command into the matchbox-terminal console:

      # glmark2-es2 --fullscreen --annotate

- from the serial console run:

      # export DISPLAY=:0

      # glmark2-es2 --fullscreen --annotate

There is also a "sunximali-test" demo app you can try.

Build Help

If you're having trouble building there are a couple things you can try:

- If this is your first time using OpenEmbedded or you're rather new to it, you could try familiarizing yourself with the project and more basic builds to start:

  - https://www.yoctoproject.org

  - https://www.yoctoproject.org/documentation

  - http://www.yoctoproject.org/docs/1.8/yocto-project-qs/yocto-project-qs.html

- In the build screenshot you can see the exact repositories my build is using, and what the latest commit was for each. You could try checking out those exact same commits for each of the layers being used to see if that helps.
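To make the second suggestion a little more concrete, here is a small sketch that turns "layer = branch:commit" lines, as they appear in the Build Configuration listing, into git checkout commands. It only prints the commands. It also assumes each layer lives in a directory named after the layer, relative to wherever you run it, which may well not match your layout, and the function name print_checkouts is hypothetical.

```shell
# Turn lines such as: meta-oe = "master:<commit>"
# into:               git -C meta-oe checkout <commit>
# Lines that don't look like a layer revision are ignored.
print_checkouts() {
    sed -n 's/^\([a-z-]*\) *= *"[^:]*:\([0-9a-f]*\)"$/git -C \1 checkout \2/p'
}

# Two of the layer revisions from the Build Configuration listing.
print_checkouts <<'EOF'
meta-sunxi = "master:14da837096f2c4bf1471b9cce5cf7fd30f55999b"
meta-oe = "master:f4533380c8a5c1d229f692222ee0c2ef9d187ef8"
EOF
```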

Build Configuration:

BB_VERSION = "1.27.1"

BUILD_SYS = "x86_64-linux"

NATIVELSBSTRING = "openSUSE-project-13.2"

TARGET_SYS = "arm-poky-linux-gnueabi"

MACHINE = "cubietruck"

DISTRO = "poky"

DISTRO_VERSION = "1.8+snapshot-20150930"

TUNE_FEATURES = "arm armv7a vfp neon callconvention-hard vfpv4 cortexa7"

TARGET_FPU = "vfp-vfpv4-neon"

meta-sunxi = "master:14da837096f2c4bf1471b9cce5cf7fd30f55999b"

meta = "master:4a1dec5c61f73e7cfa430271ed395094bb262f6b"

meta-yocto = "master:613c38fb9b5f20a89ca88f6836a21b9c7604e13e"

meta-oe = "master:f4533380c8a5c1d229f692222ee0c2ef9d187ef8"

The conf/local.conf is:

PREFERRED_PROVIDER_virtual/kernel = "linux-sunxi"

PREFERRED_PROVIDER_u-boot = "u-boot-sunxi"

PREFERRED_PROVIDER_virtual/bootloader = "u-boot-sunxi"

DEFAULTTUNE = "cortexa7hf-neon-vfpv4"

CORE_IMAGE_EXTRA_INSTALL = "packagegroup-core-x11-base sunxi-mali-test glmark2"

MACHINE_EXTRA_RRECOMMENDS = " kernel-modules"

IMAGE_FSTYPES_remove = "tar.bz2"

DISTRO_FEATURES_append = " x11"

PACKAGECONFIG_pn-glmark2 = "x11-gles2"

PREFERRED_PROVIDER_jpeg = "jpeg"

PREFERRED_PROVIDER_jpeg-native = "jpeg-native"

PACKAGE_CLASSES ?= "package_ipk"

EXTRA_IMAGE_FEATURES = "debug-tweaks"

USER_CLASSES ?= "buildstats image-mklibs image-prelink"

PATCHRESOLVE = "noop"

OE_TERMINAL = "auto"

BB_DISKMON_DIRS = "\

STOPTASKS,${TMPDIR},1G,100K \

STOPTASKS,${DL_DIR},1G,100K \

STOPTASKS,${SSTATE_DIR},1G,100K \

ABORT,${TMPDIR},100M,1K \

ABORT,${DL_DIR},100M,1K \

ABORT,${SSTATE_DIR},100M,1K"

PACKAGECONFIG_append_pn-qemu-native = " sdl"

PACKAGECONFIG_append_pn-nativesdk-qemu = " sdl"

ASSUME_PROVIDED += "libsdl-native"

CONF_VERSION = "1"

27 May 2015

ARM SBCs and SoCs

The following table shows which boards are examples of which architectures/processors:

The following table shows the most likely big.LITTLE pairings:

If you have a manufacturer and/or SoC in mind and would like to know which board you'd need to buy:

Sources:

http://en.wikipedia.org/wiki/Comparison_of_single-board_computers

http://www.arm.com/products/processors/cortex-a/

https://www.96boards.org

http://www.linux.com/news/embedded-mobile/mobile-linux/831550-survey-best-linux-hacker-sbcs-for-under-200

22 May 2015

Work Area Wire Spool Holder

I'm happy I finally found the time and parts to put together a wire spool holder for my work area!

I found all the necessary parts at my local Home Depot: a threaded rod, angle brackets, couple nuts, couple screws. I did have to drill out one hole on each of the angle brackets in order to fit the rod I had chosen, but only by millimetres.

14 May 2015

Spelling and Grammar in a Technical Publication

Does it matter? To me it does. When I'm trying to read something technical and I come across errors in spelling and grammar (non-technical errors), it throws my concentration out the window. I'm not claiming to be an expert in the rules and spelling of the English language (or any language) and although you'll find errors in my writing, I do try and I do care.

I try to read as much as I can, and I particularly like books. In the computer field there are particular publishers; everyone in this field knows who they are, and everyone has their favourites. There's one in particular, unfortunately, who seems to do quite a bad job of editing. Their books seem to have higher-than-normal levels of non-technical errors, making them difficult for me to read. Some of their books are fine, which leads me to believe that most of the editing is left to the author, and we're simply observing her/his mastery of both the technical topic at hand and the English language.

So I was quite pleased when this particular publisher got in touch with me last year to ask if I'd be interested in being a reviewer for some books that were in the process of being written. Here was my chance to not only review the technical details of a book, but to also try to make sure all the non-technical stuff was good too. Therefore, initially, I said "yes".

Then they sent me an email describing how to be a reviewer for them. One of the very first instructions clearly stated that my job was to review the technical aspects of the material and barred me from making any suggestions or corrections to any non-technical aspects of the book. So I then changed my mind and said "no". Looking at some of their publications, I wouldn't want someone to see my name as a "reviewer" and wonder how I didn't notice so many obvious mistakes!

This same publisher had a sale recently, so for a very cheap price I purchased a couple ebooks from them, one of which had not yet been published. I figured for such a low price I could take the risk they were poorly written and hope to glean any nuggets of technical information despite the distractions. The book was published the other day, and in what I see as a very ironic twist, they sent me the following email to inform me of the change in status:

:-)

PS I hope this post doesn't dissuade a technical person (perhaps a non-native speaker of the English language) from writing. I'm not trying to poke fun at people's language abilities. But as a publisher of books in the English language I think there's a higher standard to which the publisher needs to be held!

8 May 2015

OE Builds in a VM

Everyone knows that doing an OE build can take a bit of time (there are good reasons for this being true) so it follows that performing an OE build in a VM will take even longer. But when you do a build "natively" you get to potentially use all of the computer's free memory and all of its processing power. A VM running on that same machine will, at most, only allow you to use half its processing power and memory.

The question I wanted to answer was: if I performed a build "natively" but only used half the memory+cpu of a computer, how would that compare to a build in a VM that thought it was using the full computer's resources but had been constrained to only half the resources due to the use of the VM?

When performing an OE build there are two variables you can set that allow you to restrict the amount of cpu resources that are used: PARALLEL_MAKE and BB_NUMBER_THREADS. But using these variables isn't really the same as building on a machine with half the processing resources; the initial parse, for example, will use all available cpu resources (it can't know how much you want to restrict the build until it has actually completed parsing all the configuration files). Plus, there are no OE variables you can tweak to say "only use this much memory during the build".

So in order to better measure a VM's performance we need to find a better way to perform a restricted "native" build (other than just tweaking some configuration parameters). Answer: cgroups.

To be honest, I was hoping that I could demonstrate that if I used qemu+kvm and used virtio drivers everywhere I could (disk, network, etc) that the performance of a VM would at least approach that of doing a "native" build that was restricted via cgroups to the same amount of resources as was available to the VM. My findings, however, didn't bear that out.

First I'll present my results (since that's really what you want to see), then I'll describe my test procedure (for you to pick holes in ;-) ). I tried my tests on two computers and ran each test 5 times.

First I ran a build on the "raw/native" computer using all its resources (C=1):

| Run | Computer 1 | Computer 2 |
|---|---|---|
| 1 | 00:24:19 | 01:02:42 |
| 2 | 00:25:03 | 01:02:19 |
| 3 | 00:24:51 | 01:02:34 |
| 4 | 00:24:55 | 01:02:21 |
| 5 | 00:25:00 | 01:02:57 |
| avg | 00:24:50 | 01:02:35 |

I'm using "C" to represent the CPU resources; "1" meaning "all CPUs", i.e. using a value of "oe.utils.cpu_count()" for both PARALLEL_MAKE and BB_NUMBER_THREADS. A value of "0.5" means I've adjusted these parameters to be half of what "oe.utils.cpu_count()" would give.
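For illustration, here is one way the C=0.5 values could be worked out and turned into local.conf lines. This is a sketch rather than the exact method used for these tests: it asks the system for its CPU count with getconf instead of oe.utils.cpu_count(), and the function name half_cpus is made up.

```shell
# Half of the given CPU count, but never less than 1.
half_cpus() {
    n=$(( $1 / 2 ))
    [ "$n" -ge 1 ] || n=1
    echo "$n"
}

# Number of online CPUs as reported by the system.
cpus=$(getconf _NPROCESSORS_ONLN)
half=$(half_cpus "$cpus")

# Lines that could be placed in conf/local.conf for a C=0.5 build.
printf 'BB_NUMBER_THREADS = "%s"\n' "$half"
printf 'PARALLEL_MAKE = "-j %s"\n' "$half"
```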

Then I ran the same build again on the "native" machine but this time using BB_NUMBER_THREADS/PARALLEL_MAKE to only use half the cpus/threads (C=0.5):

| Run | Computer 1 | Computer 2 |
|---|---|---|
| 1 | 00:24:20 | 00:57:10 |
| 2 | 00:24:34 | 00:57:32 |
| 3 | 00:25:11 | 00:57:08 |
| 4 | 00:24:39 | 01:03:31 |
| 5 | 00:24:31 | 01:01:50 |
| avg | 00:24:39 | 00:59:26 |

Counter-intuitively, when restricting the resources, the builds performed ever-so-slightly better than when allowing the build to use all of the computer's resources. Perhaps these builds aren't CPU-bound, and this set just happened to come out slightly better than the "full resources" builds. Or (for this workload) these Intel CPUs aren't really able to make much use of CPU threads; it's CPU cores that count. (??)

Then I performed the same set of builds on the "native" computers, but after having restricted their resources via cgroups.

C=1 (restricted by half via cgroups, same for memory):

| Run | Computer 1 | Computer 2 |
|---|---|---|
| 1 | 00:28:05 | 01:05:57 |
| 2 | 00:28:18 | 01:05:33 |
| 3 | 00:28:21 | 01:05:46 |
| 4 | 00:28:14 | 01:05:22 |
| 5 | 00:27:37 | 01:05:37 |
| avg | 00:28:07 | 01:05:39 |

C=0.5 (further restricted by half (again) via cgroups, same for memory):

| Run | Computer 1 | Computer 2 |
|---|---|---|
| 1 | 00:27:20 | 01:04:08 |
| 2 | 00:27:15 | 01:07:39 |
| 3 | 00:27:08 | 01:07:42 |
| 4 | 00:27:17 | 01:07:57 |
| 5 | 00:27:13 | 01:08:16 |
| avg | 00:27:15 | 01:07:08 |

So there's obviously a difference between restricting a build's resources via BB_NUMBER_THREADS/PARALLEL_MAKE versus setting hard limits using cgroups. That's not too surprising. But again there's very little difference between using "cpu_count()" number of CPU resources versus using half that amount; in fact, for Computer 1 the build time improved slightly.

Now here's the part where I used a VM running under qemu+kvm. I had been hoping these times would be comparable to the times I obtained when restricting the build via cgroups, but that wasn't the case.

C=1 (restricted by half via VM, same for memory):

| Run | Computer 1 | Computer 2 |
|---|---|---|
| 1 | 00:41:36 | 01:45:42 |
| 2 | 00:41:22 | 01:47:52 |
| 3 | 00:41:41 | 01:44:31 |
| 4 | 00:41:16 | 01:50:25 |
| 5 | 00:41:12 | 01:41:41 |
| avg | 00:41:25 | 01:46:02 |

C=0.5 (further restricted again by half via VM, same for memory):

| Run | Computer 1 | Computer 2 |
|---|---|---|
| 1 | 00:42:02 | 01:30:23 |
| 2 | 00:42:07 | 01:34:43 |
| 3 | 00:43:05 | 01:31:14 |
| 4 | 00:43:14 | 01:36:46 |
| 5 | 00:42:12 | 01:42:05 |
| avg | 00:42:32 | 01:35:02 |

Analysis

Using the first build as a reference (letting the build use all the resources it wants on a "raw/native" machine):

- constraining the build to use half the machine's resources via cgroups results in build times that are from 4.90% to 13.22% slower.

- performing the same build in a qemu+kvm VM results in build times that are from 59.90% to 72.55% slower.
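The percentages can be reproduced from the "avg" rows of the tables with a bit of arithmetic. The sketch below uses two made-up helper names (to_seconds and pct_slower). As far as I can tell from the numbers, the cgroups figures come out of comparing the two C=1 averages, while the VM figures come out of comparing the C=0.5 VM averages against the C=0.5 native averages.

```shell
# Convert HH:MM:SS to a number of seconds (awk copes with leading zeros).
to_seconds() {
    echo "$1" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# Percentage by which the second time is slower than the first (reference).
pct_slower() {
    ref=$(to_seconds "$1")
    new=$(to_seconds "$2")
    awk -v r="$ref" -v n="$new" 'BEGIN { printf "%.2f\n", (n - r) * 100 / r }'
}

# Computer 1, native C=1 average vs cgroups C=1 average.
pct_slower 00:24:50 00:28:07    # prints 13.22

# Computer 2, native C=0.5 average vs VM C=0.5 average.
pct_slower 00:59:26 01:35:02    # prints 59.90
```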

Specifics

- bitbake core-image-minimal

- fido release, e4f3cf8950106bd420e09f463f11c4e607462126, 2138 tasks

- DISTRO=poky (meta-poky)

- a "-c fetchall" was performed initially, then all directories but "conf" were deleted and the timed build was performed with "BB_NO_NETWORK=1"

- between each build everything would be deleted except for the "conf" directory; therefore no sstate or tmp or cache etc

- /tmp implemented on a tmpfs

- because the VMs had limited disk space, all builds were performed with "INHERIT+=rm_work"

- to help load/manage the VMs I use a set of scripts I created here: https://github.com/twoerner/qemu_scripts

- in the VMs, both the "Download" directory and the source/recipes are mounted and shared from the host (using virtio)

So, for example, say I'm running a shell, bash, and its PID is 1234. As root, go to /sys/fs/cgroup and:

- mkdir cpuset/oebuild

- mkdir memory/oebuild

- echo "0-3" > cpuset/oebuild/cpuset.cpus

- echo 0 > cpuset/oebuild/cpuset.mems

- echo 8G > memory/oebuild/memory.limit_in_bytes

- echo 1234 > cpuset/oebuild/tasks

- echo 1234 > memory/oebuild/tasks

Here you see both the "memory" and "cpuset" cgroups are constrained by the oebuild cgroup for this process (i.e. /proc/self):

$ cat /proc/self/cgroup

10:hugetlb:/

9:perf_event:/

8:blkio:/

7:net_cls,net_prio:/

6:freezer:/

5:devices:/

4:memory:/oebuild

3:cpu,cpuacct:/

2:cpuset:/oebuild

1:name=systemd:/user.slice/user-1000.slice/session-625.scope
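If you'd rather not eyeball that listing, a small filter can pick out the controllers that ended up in a given group. This is just a sketch; the function name controllers_in_group is made up, and the sample input is a few lines copied from the output above (on a live system you would feed it /proc/self/cgroup instead).

```shell
# Print the controllers whose cgroup path is exactly the given group.
# Input lines have the form "id:controllers:path".
controllers_in_group() {
    awk -F: -v grp="$1" '$3 == grp { print $2 }'
}

# A few lines from the listing above.
controllers_in_group /oebuild <<'EOF'
4:memory:/oebuild
3:cpu,cpuacct:/
2:cpuset:/oebuild
EOF
```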

23 Feb 2015

Bash Prompt Command Duration

My current bash prompt is fairly useful: it tells me on which machine it's running (useful for situations where I'm ssh'ed out to different places or running in one of multiple text-based VMs), it tells me under which user I'm currently running, and it indicates where I am in the directory structure. If I happen to be located in a git repository it'll also tell me which branch is currently checked-out, and whether or not my working directory is clean.

A couple years back I added a timestamp: every time bash prints out my prompt, it prints out the date and time it did so. I found that I would write code using one or more terminals and I'd have another terminal for "make check". Since the testing could take a while I would often go off and do other things while waiting for it to complete. Sometimes I would go off for so long that, upon returning, I couldn't remember whether or not the tests were run after my most recent code changes! Comparing the time stamps on the various terminals (or on the code files) would quickly remind me whether or not the tests were run.

In recent years I've also taken to using "pushd" and "popd" quite a bit. In a strange way, pushing into other directories has sort of become a rather crude "todo list" in my workflow. I'll be working on task A, but then realize something else needs to be done. I'll "pushd" somewhere else to do task B and, if I remember to "popd", be brought back to task A. Trying to remember how many levels deep I was became difficult, so I added a "dirs:" count to my bash command line to keep track of how many times I had "pushd".

Since the pushd count proved so useful, I also added something similar to keep track of how many background tasks a given terminal had spawned; a "jobs:" count.

Recently I've come to realize that there's another piece of information I'd like my bash prompt to keep track of for me: what was the duration of the last command I just ran?

Often I'll do a "du -sh <dir>" on some directory to see where all my disk space is being eaten up. Usually I can guess which directories contain few bytes and which contain a lot. But sometimes an innocuous-looking directory will surprise me and "du" will take a long time. When it does finish my first question is invariably: how long did _that_ take?! I also tend to do a lot of builds, and I'm always curious to know how long a build took. For commands that produce little output, figuring out the duration is easy: I just compare the current prompt's timestamp to the previous prompt's timestamp. But for any command which produces lots of "scroll off the end of the scroll-back buffer" output, I lose the ability to tell whether a command ended shortly after I gave up waiting and went to bed, or whether it finished just before I got up in the morning.

Therefore I recently tweaked my bash command-line prompt, yet again, to give me the duration of the last command that was run.

There are two "tricks" that were necessary to get this working. The first is the "SECONDS" shell variable. Provided you haven't unset it, or otherwise used it for your own purposes, reading "SECONDS" gives you the number of seconds since that bash instance was started. The second trick is using bash's "trap" builtin with the "DEBUG" pseudo-signal. From the trap documentation: "if a sigspec is DEBUG, the command arg is executed before every simple command".

Keeping track of the duration of any command is a simple matter of storing the current SECONDS at the start of the command, then comparing that value to the current SECONDS at the end of the command. We know when any new command is about to start thanks to the DEBUG trap, and we know when the command is done thanks to bash printing a new PS1 prompt.

You can find all the gory details here:

https://github.com/twoerner/myconfig/blob/master/bashrc

Final tweaks include not printing a duration if it is zero, and printing the duration in hh:mm:ss format if it is longer than 59 seconds.
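
As a rough illustration of the approach described above, here is a minimal sketch. It is not a copy of the bashrc linked above: the names format_duration, timer_start, timer_stop, CMD_START and LAST_DURATION are made up for this example, and it assumes PROMPT_COMMAND is not already being used for something else.

```bash
#!/bin/bash
# Hypothetical helper names; a sketch, not the author's actual bashrc.

# Print nothing for 0 seconds, "Ns" up to 59 seconds, hh:mm:ss beyond that.
format_duration() {
    local secs=$1
    if [ "$secs" -le 0 ]; then
        return 0
    elif [ "$secs" -le 59 ]; then
        printf '%ss' "$secs"
    else
        printf '%02d:%02d:%02d' \
            $((secs / 3600)) $((secs % 3600 / 60)) $((secs % 60))
    fi
}

# Called by the DEBUG trap before every simple command. Only the first
# call after a prompt records the start time; later calls keep it.
timer_start() {
    CMD_START=${CMD_START:-$SECONDS}
}

# Called just before the prompt is printed: compute the elapsed time
# and forget the start time so the next command starts a fresh count.
timer_stop() {
    LAST_DURATION=$(format_duration $((SECONDS - ${CMD_START:-$SECONDS})))
    unset CMD_START
}

trap 'timer_start' DEBUG
PROMPT_COMMAND=timer_stop
PS1='[${LAST_DURATION}] \u@\h:\w\$ '
```

The DEBUG trap also fires just before timer_stop itself runs, which is why pressing Enter on an empty line yields a zero duration and therefore prints nothing.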

29 Jan 2015

Qemu Networking Investigation - Details

Assume you have a virtual machine on a disk image that you want to run in qemu such that:

- the target can access the network at large

- you can access the target's network from the host

- no static IP is assigned within the image itself, so the image can be set up flexibly on any sort of network (class A, class B, class C) with any IP address without making changes to the image

The steps are:

- create the disk file

$ qemu-img create -f qcow2 myimage.qcow2 200G

- install your favourite distribution into the disk file

$ qemu-system-x86_64 \

-enable-kvm \

-smp 2 \

-cpu host \

-m 4096 \

-drive file=/.../myimage.qcow2,if=virtio \

-net nic,model=virtio \

-net user \

-cdrom /.../openSUSE-Tumbleweed-NET-x86_64-Snapshot20150126-Media.iso

- run the image

$ qemu-system-x86_64 \

-enable-kvm \

-cpu host \

-smp 6 \

-m 4096 \

-net nic,model=virtio \

-net user \

-drive file=/.../myimage.qcow2 \

-nographic

- tweak it to your preferences

edit /etc/default/grub, edit GRUB_CMDLINE_LINUX_DEFAULT:

to add

" console=ttyS0,115200"

to remove

"splash=silent quiet"

# grub2-mkconfig -o /boot/grub2/grub.cfg

configure /tmp for tmpfs, add the following to /etc/fstab:

none /tmp tmpfs defaults,noatime 0 0

- take a snapshot

$ qemu-img snapshot -c afterInstallAndConfig myimage.qcow2

- create a configuration file named CONFIG

checkenv() {

if [ -z "${!1}" ]; then

echo "required env var '$1' not defined"

exit 1

fi

}

findcmd() {

which $1 > /dev/null 2>&1

if [ $? -ne 0 ]; then

echo "can't find required binary: '$1'"

exit 1

fi

}

MACADDR=DE:AD:BE:EF:00:01

USERID=trevor

GROUPID=users

IPBASE=192.168.8.

HOSTIP=${IPBASE}1

- create a "super script" called start_vm

#!/bin/bash

if [ $# -ne 1 ]; then

echo "usage: $(basename $0) <image>"

exit 1

fi

source CONFIG

checkenv MACADDR

checkenv USERID

checkenv GROUPID

checkenv IPBASE

THISDIR=$(pwd)

IMAGE=$1

TAPDEV=$(sudo $THISDIR/qemu-ifup)

if [ $? -ne 0 ]; then

echo "qemu-ifup failed"

exit 1

fi

echo "tap device: $TAPDEV"

qemu-system-x86_64 \

-enable-kvm \

-cpu host \

-smp sockets=1,cores=2,threads=2 \

-m 4096 \

-drive file=$IMAGE,if=virtio \

-net nic,model=virtio,macaddr=$MACADDR \

-net tap,ifname=$TAPDEV,script=no,downscript=no \

-nographic

sudo $THISDIR/qemu-ifdown $TAPDEV

- create an "up" script called qemu-ifup

#!/bin/bash

source CONFIG

usage() {

echo "sudo $(basename $0)"

}

checkenv USERID

checkenv GROUPID

checkenv IPBASE

checkenv HOSTIP

findcmd tunctl

findcmd ip

findcmd iptables

findcmd dnsmasq

if [ $EUID -ne 0 ]; then

echo "Error: This script must be run with root privileges"

exit 1

fi

if [ $# -ne 0 ]; then

usage

exit 1

fi

TAPDEV=$(tunctl -b -u $USERID -g $GROUPID 2>&1)

STATUS=$?

if [ $STATUS -ne 0 ]; then

echo "tunctl failed: $TAPDEV" >&2

exit 1

fi

ip addr add $HOSTIP/32 broadcast ${IPBASE}255 dev $TAPDEV

ip link set dev $TAPDEV up

ip route add ${IPBASE}0/24 dev $TAPDEV

# setup NAT for tap$n interface to have internet access in QEMU

iptables -t nat -A POSTROUTING -j MASQUERADE -s ${IPBASE}0/24

echo 1 > /proc/sys/net/ipv4/ip_forward

echo 1 > /proc/sys/net/ipv4/conf/$TAPDEV/proxy_arp

iptables -P FORWARD ACCEPT

# startup dnsmasq

dnsmasq \

--strict-order \

--except-interface=lo \

--interface=$TAPDEV \

--listen-address=$HOSTIP \

--bind-interfaces \

-d \

-q \

--dhcp-range=${TAPDEV},${IPBASE}5,${IPBASE}20,255.255.255.0,${IPBASE}255 \

--conf-file="" \

> dnsmasq.log 2>&1 &

echo $! > dnsmasq.pid

echo $TAPDEV

exit 0

- create a "down" script called qemu-ifdown

#!/bin/bash

source CONFIG

checkenv IPBASE

findcmd tunctl

findcmd iptables

usage() {

echo "sudo $(basename $0) <tap-dev>"

}

if [ $EUID -ne 0 ]; then

echo "Error: This script (qemu-ifdown) must be run with root privileges"

exit 1

fi

if [ $# -ne 1 ]; then

usage

exit 1

fi

TAPDEV=$1

tunctl -d $TAPDEV

# cleanup the remaining iptables rules

iptables -t nat -D POSTROUTING -j MASQUERADE -s ${IPBASE}0/24

# kill dnsmasq

if [ -f dnsmasq.pid ]; then

kill $(cat dnsmasq.pid)

rm -f dnsmasq.pid

rm -f dnsmasq.log

fi

- ??

- profit!
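
The checkenv helper in CONFIG relies on bash's indirect expansion: "${!1}" expands to the value of the variable whose name is passed as the first argument. Here is a small sketch of that mechanism on its own. The function name is_set and the variable NOT_DEFINED_ANYWHERE are made up for this example; unlike checkenv it returns a status instead of calling exit, so it is safe to try in an interactive shell.

```bash
#!/bin/bash
# Hypothetical variant of checkenv: same test, but no exit.
is_set() {
    # ${!1} is the value of the variable named by $1 (bash-only syntax)
    [ -n "${!1}" ]
}

IPBASE=192.168.8.

for var in IPBASE NOT_DEFINED_ANYWHERE; do
    if is_set "$var"; then
        echo "$var is set to '${!var}'"
    else
        echo "$var is empty or unset"
    fi
done
```

Because indirect expansion is a bash feature, the scripts above need their "#!/bin/bash" line; a plain POSIX sh would reject the syntax.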

Qemu Networking Investigation - On The Shoulders Of Giants

If you create a virtual disk, install your favourite distribution on it, and want to run it with qemu without having to hard-wire a static IP inside the image itself then this post provides you with some things to consider.

In previous posts I looked at how The Yocto Project is able to accomplish these goals. It does so by being able to supply a kernel and a kernel append, separate from the filesystem image itself. An IP address can be provided in the kernel append, which allows you to specify a static IP... in a flexible way :-)

When you create a virtual machine of, say, your favourite distribution, the installation puts the VM's kernel inside the filesystem, which means it's not available outside the image for you to use the kernel append trick to specify an IP address.

A flexible way for a virtual machine to handle networking is to use dhcp. This requires a dhcp server to be available somewhere on the network which can assign an IP to whoever asks. dnsmasq provides dhcp server capabilities and provides lots of configurability. As I noted in previous blog posts, since we already have a mechanism for running up and down networking-related scripts before and after running a qemu image, there is no reason why we can't start and stop an appropriately configured dhcp server to satisfy our virtual machine. We just want to make sure the dhcp server is only listening on the virtual interface and not interfering with any other interface, or with any other dhcp server running on the network.

Building on the steps used by the scripts from The Yocto Project, we can simply add a line to start up dnsmasq and configure it so that it only listens on the virtual tap device which has been set up (by the rest of the up script) for the virtual machine we are bringing up:

dnsmasq \

--strict-order \

--except-interface=lo \

--interface=$TAPDEV \

--listen-address=$HOSTIP \

--bind-interfaces \

-d \

-q \

--dhcp-range=${TAPDEV},${IPBASE}5,${IPBASE}20,255.255.255.0,${IPBASE}255 \

--conf-file="" \

> dnsmasq.log 2>&1 &

The really hard part was the --dhcp-range option. Originally I had only specified

--dhcp-range=${TAPDEV},${IPBASE}5,${IPBASE}20

which kept leading to the following error message:

dnsmasq-dhcp no address range available for dhcp request via tap0

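Wow, how frustrating was that?! As it turns out, if you don't explicitly specify the mask, dnsmasq takes it from the interface's own configuration. Since the up script gives the tap device a /32 address, that mask is 255.255.255.255, leaving absolutely no space whatsoever from which to generate an address!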
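
To make the arithmetic concrete, here is a small sketch that counts how many addresses a dotted-quad netmask spans. The function name addresses_in_mask is made up for this example, and it assumes a well-formed, contiguous mask.

```bash
#!/bin/bash
# addresses_in_mask MASK: print how many addresses a netmask covers.
# Hypothetical helper for illustration; no validation of the input.
addresses_in_mask() {
    local -a octets
    # Split the dotted quad into its four octets
    IFS=. read -r -a octets <<< "$1"
    local mask=$(( (octets[0] << 24) | (octets[1] << 16) | (octets[2] << 8) | octets[3] ))
    # The host bits are the ones not set in the mask
    echo $(( (mask ^ 0xFFFFFFFF) + 1 ))
}

addresses_in_mask 255.255.255.255   # prints 1: only the host's own address
addresses_in_mask 255.255.255.0     # prints 256: room for the .5 to .20 range
```

With a single-address network there is nowhere for the .5 to .20 range to live, which matches the "no address range available" complaint.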

This is really the only tweak to The Yocto Project's qemu scripts you need in order to run an image in qemu without hard-coding an IP address, while still being able to access services running on the target from the host.

Before your image is started:

- a virtual tap interface is created

- various ifconfig-fu is used to set up the host's side of this interface and the correct routing

- iptables is used to enable NAT on the VM's network interface

- dnsmasq is run which can provide an IP of your choosing to the VM (provided your image is configured to use dhcp to obtain its IP address)

Qemu Networking Investigation - The Yocto Way

qemu provides many options for setting up lots of interesting things and for setting them up in lots of interesting ways. One such set of options allows you to configure how the networking is going to work between the host and the target.

Due to the fact there are so many different ways to do networking with a virtual machine, qemu simply provides the ability to run an "up" script and a "down" script. These scripts, which you can specify, are just hooks which allow you to do some funky networking fu just before qemu starts the image (up) and right after the image terminates (down).

If you do supply these scripts, and something within those scripts requires root privileges (which is most likely the case), then the entire qemu cmdline needs to be run as root. One of the clever things The Yocto Project's qemu scripts do is to not supply these scripts as part of the qemu invocation. It runs them, instead, just before and just after running the qemu command itself.

A "super script" is provided with The Yocto Project which runs the networking "up" script, then runs qemu (with all its options), then runs the networking "down" script. By doing it this way, the "super script" can simply run the up and down scripts with sudo, which gives the user an opportunity to provide the required password. The qemu image itself isn't run with root privileges, but simply with the privileges of the invoking user (which I assume is a regular, unprivileged user). Only the parts which really need root privileges (i.e. the networking up and down scripts) are run as root; everything else is run as a regular user.
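
The shape of such a "super script" can be sketched in a few lines of bash. This is not The Yocto Project's code: run_bracketed and the fake_* functions are invented for this example, and the sketch assumes the up step prints the name of the tap device it created.

```bash
#!/bin/bash
# run_bracketed UP DOWN CMD [ARGS...]
# Runs UP (which prints a tap device name), then CMD with the device name
# appended to its arguments, then DOWN with the device name.
run_bracketed() {
    local up=$1 down=$2 tapdev status
    shift 2
    tapdev=$($up) || { echo "up step failed" >&2; return 1; }
    "$@" "$tapdev"
    status=$?
    $down "$tapdev"      # always clean up, even if CMD failed
    return $status
}

# Stand-ins for the real scripts, so the flow can be tried without root.
fake_up()   { echo tap0; }
fake_down() { echo "down: $1"; }
fake_vm()   { echo "vm running on $1"; }

run_bracketed fake_up fake_down fake_vm
```

In real use UP and DOWN would be the sudo'ed networking scripts, and CMD would be the unprivileged qemu invocation, so only the first and last steps ever run as root.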

The Yocto Project's qemu "super script" is runqemu. runqemu very quickly runs runqemu-internal. It is runqemu-internal which invokes the networking up and down scripts. Within The Yocto Project the networking up script is runqemu-ifup and the networking down script is runqemu-ifdown.

I won't go into all the gory details here (you're welcome to look at The Yocto Project's scripts yourself) but at a high level the up script:

- uses tunctl to set up a new, virtual tap interface

- uses various ifconfig-fu to set up the host's side of the virtual interface as well as manipulate the host's routing tables

- fiddles with the host's firewall to enable NATing for the target

In order to assign an IP address to the VM, The Yocto Project builds the kernel as a separate entity, invokes qemu specifying the kernel, and provides a kernel cmdline append specifying the IP address to use for the target.

qemu ... --kernel <path/to/kernel> ... --append "ip=192.168.7.$n2::192.168.7.$n1:255.255.255.0"

But what if you have used qemu to install a version of your favourite distribution? In this case the kernel is in the image itself, and doesn't exist outside of it. You could, theoretically, copy the kernel from inside the image to the host, but this gets messy if/when the distribution updates the kernel.

If your kernel is not outside your image then you can't use The Yocto Project's --append trick to specify an IP address for your virtual machine.

You could, during the course of installing and setting up your virtual machine, use your virtual machine distribution's networking tools to configure a static IP, but this means the networking up and down scripts would have to be tailored to match the virtual machine's static settings. This is less flexible.

My solution is to come in my next post.

Qemu Networking Investigation - Introduction

The Yocto Project's qemu integration is quite fascinating. It allows you to build an image targeting a qemu machine, then run it with their runqemu command. Various Yocto magic comes together to run the image under qemu, including getting the networking right so you can access the target from your host.

If all you need to do with a qemu image, networking-wise, is to reach out and do something over tcp (e.g. surf the web, install updates, etc) then qemu's "-net user" mode is more than adequate. One of the great features of the "-net user" mode is that it doesn't require root permissions.

If, however, instead of initiating the connection from inside the image, you want to initiate the connection from (for example) the host to connect to something running on the qemu target (e.g. you want to ssh from your host to the target), then you'll need some more qemu networking magic. As I said, The Yocto Project's qemu-fu fully handles this scenario for you. All you need to do is supply a password for sudo!

However, there are a couple of caveats. The networking that The Yocto Project sets up for you supplies a static IP to the image as it is being spun up. This is done with a kernel cmdline append that specifies the IP address to use. A kernel cmdline append can only be supplied to qemu if the kernel lives outside the image. If you have created your own image which contains its kernel inside the root filesystem, you can't use this trick to assign an IP address.

This post and a number of follow-up posts titled "Qemu Networking Investigation" explore these issues. The first issue is figuring out how to get a qemu image to acquire an IP address without having to assign it statically inside the image itself when you can't assign it with a kernel cmdline append. The second issue is figuring out how to set up the networking so that you can reach services running inside the image.

Knowing these pieces of information is useful if you're using qemu to run, say, openSUSE or if you want to use The Yocto Project's *.vmdk option.

6 Jan 2015

The Yocto Project: Introducing devtool - updates!

Now that The Yocto Project's devtool is in the main trunk, some of the instructions from a previous post need to be updated. Here is a link to the updated instructions, enjoy!
